Introduction

Let's try the monodepth demo (Python version) that ships with the demos in Open Model Zoo. This article is a companion to an earlier, related article.

Environment

This time I run it on macOS.

MacBook Pro (13-inch, 2018, Four Thunderbolt 3 Ports)

2.7 GHz quad-core Intel Core i7, 16 GB memory

macOS Big Sur 11.1

Python 3.7.7

openvino 2021.2.185

Checking the models

Open models.lst to check which models the demo uses. Two models are available: fcrn-dp-nyu-depth-v2-tf and midasnet. If you do not have the models yet, fetch them with the Model Downloader (see the previous article).
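As a rough illustration of the download step, the sketch below builds the command lines for the Model Downloader and the Model Converter from Python. The `--list` option is the one named in the comment inside models.lst. The tool location (`TOOLS_DIR`) and the output directory are placeholders I chose for illustration, so adjust them to your installation. The converter step is included on the assumption that these public models must be converted to IR (.xml/.bin) before the demo can load them.

```python
import subprocess
import sys
from pathlib import Path

# Hypothetical location of the Open Model Zoo downloader tools; adjust to your setup.
TOOLS_DIR = Path("open_model_zoo/tools/downloader")


def build_download_commands(models_lst, output_dir, tools_dir=TOOLS_DIR):
    """Return the downloader and converter command lines as lists of strings."""
    download = [
        sys.executable, str(tools_dir / "downloader.py"),
        "--list", str(models_lst),   # option named in the models.lst comment
        "-o", str(output_dir),       # where the original model files go
    ]
    convert = [
        sys.executable, str(tools_dir / "converter.py"),
        "--list", str(models_lst),
        "-d", str(output_dir),       # directory the models were downloaded to
        "-o", str(output_dir),       # where the converted IR files go
    ]
    return download, convert


def fetch_models(models_lst, output_dir):
    """Run both steps in order, stopping at the first failure."""
    for cmd in build_download_commands(models_lst, output_dir):
        subprocess.run(cmd, check=True)
```

Calling `fetch_models("models.lst", "models")` would then download and convert both models in one go, provided the paths match your environment.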

# This file can be used with the --list option of the model downloader.

fcrn-dp-nyu-depth-v2-tf

midasnet

Checking the help

% python3 monodepth_demo.py -h

usage: monodepth_demo.py [-h] -m MODEL -i INPUT [-l CPU_EXTENSION] [-d DEVICE]

optional arguments:

-h, --help show this help message and exit

-m MODEL, --model MODEL

Required. Path to an .xml file with a trained model

-i INPUT, --input INPUT

Required. Path to a input image file

-l CPU_EXTENSION, --cpu_extension CPU_EXTENSION

Optional. Required for CPU custom layers. Absolute

MKLDNN (CPU)-targeted custom layers. Absolute path to

a shared library with the kernels implementations

-d DEVICE, --device DEVICE

Optional. Specify the target device to infer on; CPU,

GPU, FPGA, HDDL or MYRIAD is acceptable. Sample will

look for a suitable plugin for device specified.

Default value is CPU

Running the demo

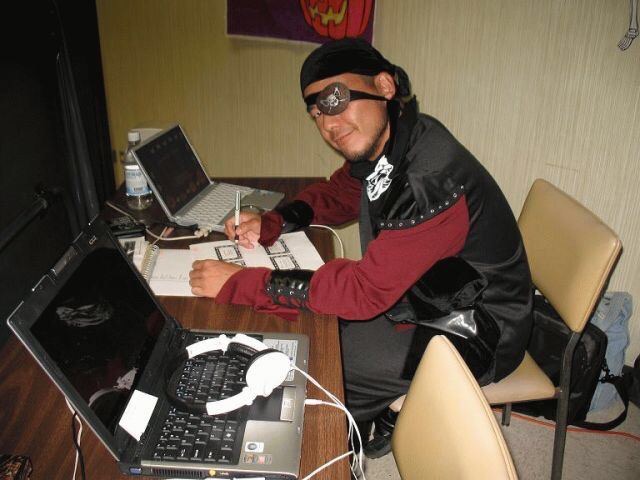

I used the image below as input. It is a Suzuki Bandit 250v.

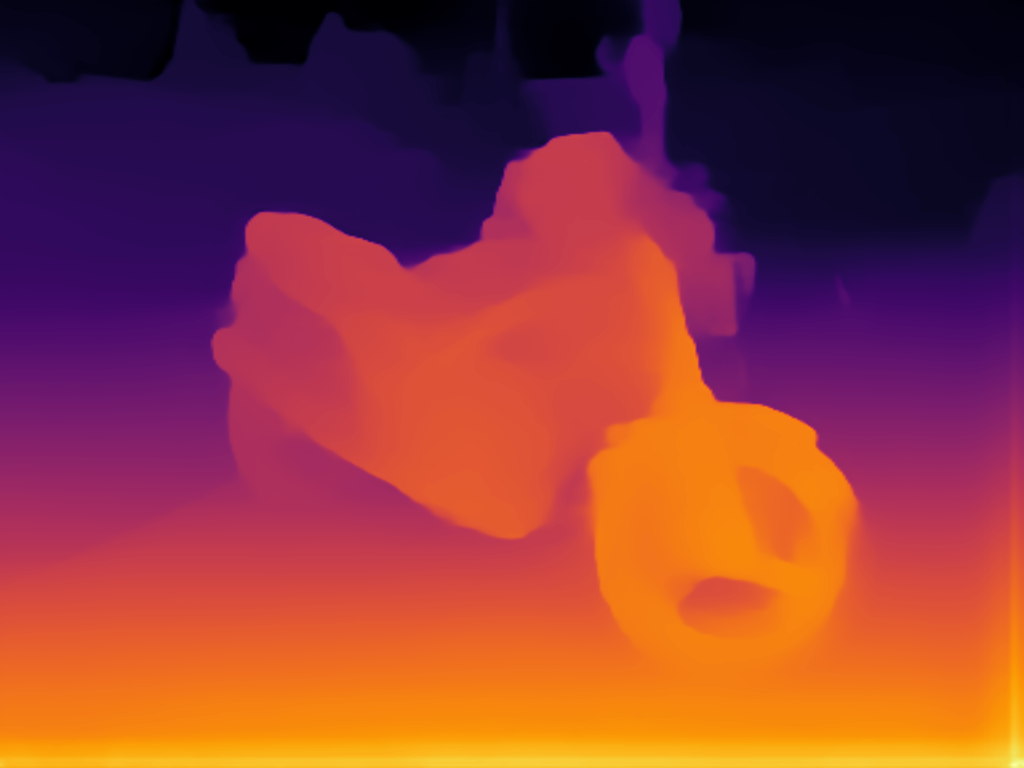

Running with model: fcrn-dp-nyu-depth-v2-tf

% python3 monodepth_demo.py -m /public/fcrn-dp-nyu-depth-v2-tf/FP16/fcrn-dp-nyu-depth-v2-tf.xml -i IMG_0958.jpg

[ INFO ] creating inference engine

[ INFO ] Loading network

[ INFO ] preparing input blobs

[ INFO ] Image is resized from (768, 1024) to (228, 304)

[ INFO ] loading model to the plugin

[ INFO ] starting inference

[ INFO ] processing output blob

[ INFO ] Disparity map was saved to disp.pfm

[ INFO ] Color-coded disparity image was saved to disp.png

[ INFO ] This demo is an API example, for any performance measurements please use the dedicated benchmark_app tool from the openVINO toolkit

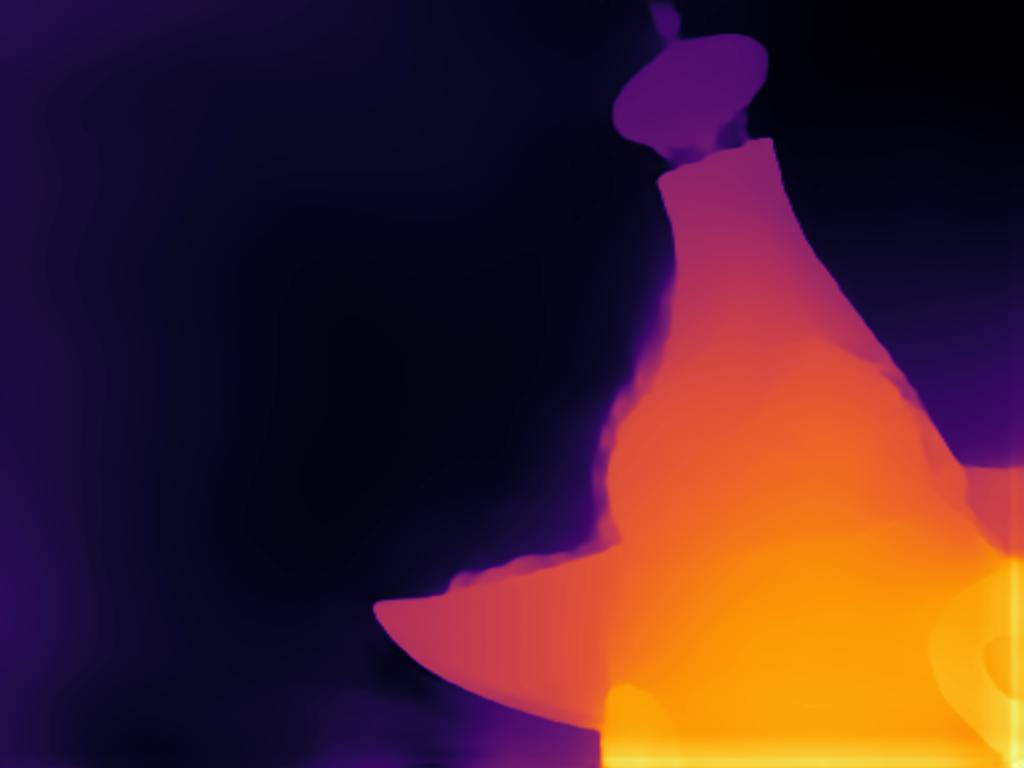
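The log reports two outputs: disp.pfm and disp.png. To look at the raw values in disp.pfm, a small reader is enough. The sketch below assumes the file follows the common Portable Float Map layout (a `Pf` or `PF` header line, a `width height` line, a scale line whose sign encodes the byte order, then 32-bit floats stored bottom row first). I have not checked the demo's writer against this, so treat it as a starting point rather than a reference reader.

```python
import struct


def read_pfm(path):
    """Read a PFM file and return (width, height, channels, rows).

    rows is ordered top to bottom; each row is a flat list of floats.
    """
    with open(path, "rb") as f:
        magic = f.readline().decode("ascii").strip()
        if magic not in ("Pf", "PF"):
            raise ValueError("not a PFM file: %r" % magic)
        channels = 1 if magic == "Pf" else 3
        width, height = map(int, f.readline().decode("ascii").split())
        scale = float(f.readline().decode("ascii").strip())
        endian = "<" if scale < 0 else ">"   # negative scale means little-endian
        count = width * height * channels
        data = struct.unpack(endian + "%df" % count, f.read(4 * count))
    row_len = width * channels
    rows = [list(data[i:i + row_len]) for i in range(0, count, row_len)]
    rows.reverse()   # PFM stores the bottom row first
    return width, height, channels, rows
```

With `width, height, channels, rows = read_pfm("disp.pfm")`, `rows[0][0]` would be the value at the top-left pixel if the layout assumption holds.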

Running with model: midasnet

% python3 monodepth_demo.py -i IMG_0958.jpg -m /public/midasnet/FP16/midasnet.xml

[ INFO ] creating inference engine

[ INFO ] Loading network

[ INFO ] preparing input blobs

[ INFO ] Image is resized from (768, 1024) to (384, 384)

[ INFO ] loading model to the plugin

[ INFO ] starting inference

[ INFO ] processing output blob

[ INFO ] Disparity map was saved to disp.pfm

[ INFO ] Color-coded disparity image was saved to disp.png

[ INFO ] This demo is an API example, for any performance measurements please use the dedicated benchmark_app tool from the openVINO toolkit

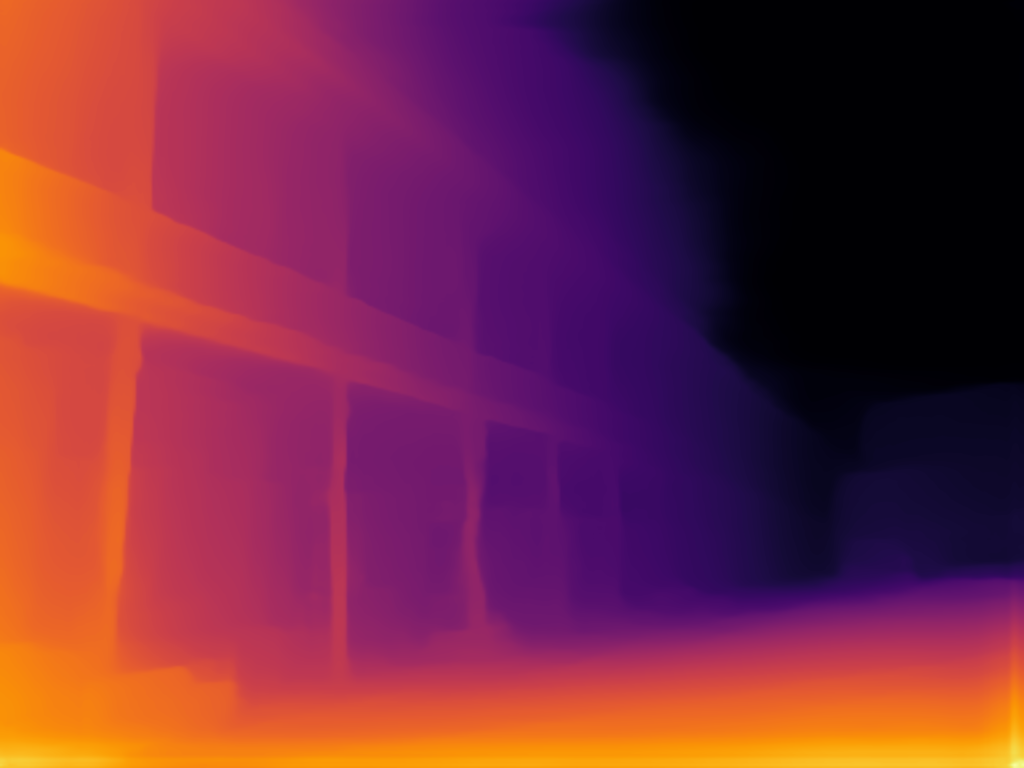

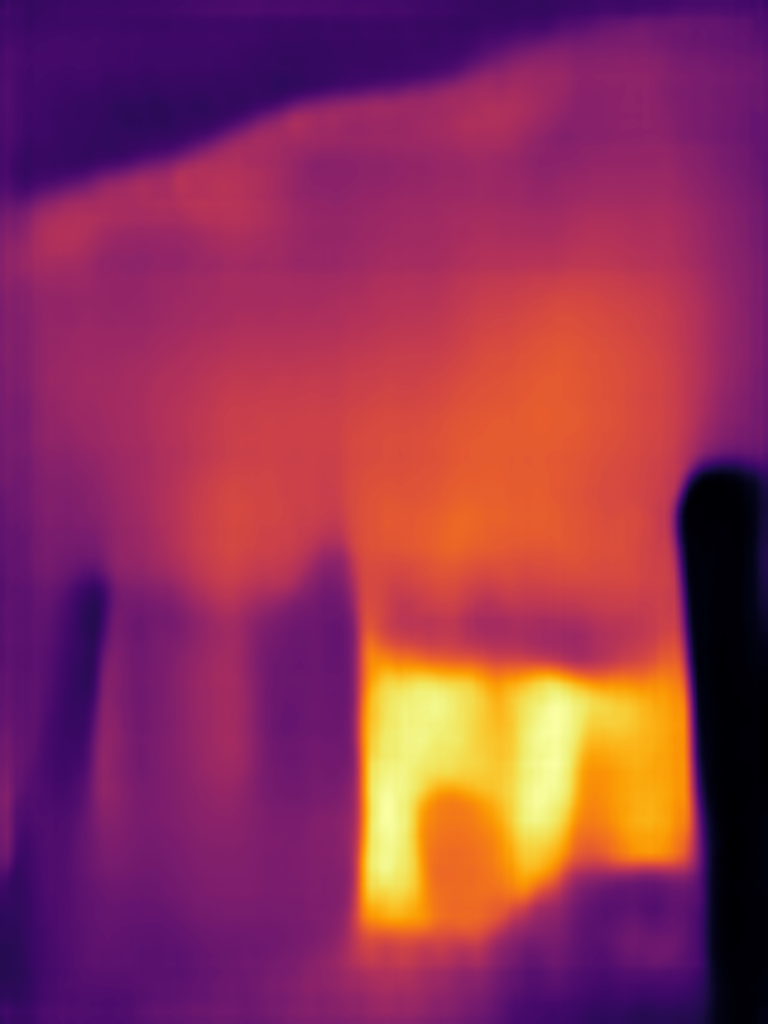

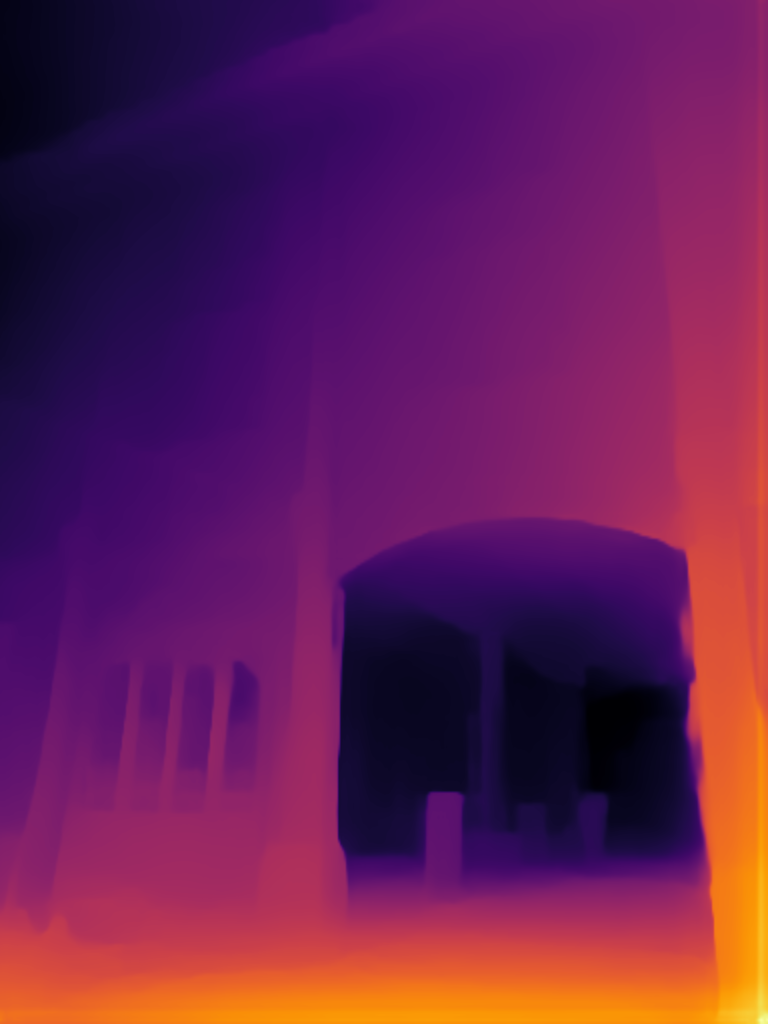
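The two logs show different network input sizes for the same (768, 1024) image: (228, 304) for fcrn-dp-nyu-depth-v2-tf and (384, 384) for midasnet. A quick calculation makes the difference concrete. The sketch assumes each pair is (height, width), which is my reading of the log and not something the demo states; the aspect-ratio conclusion does not depend on that order as long as both pairs use the same one.

```python
def scale_factors(src, dst):
    """Return the per-axis scale factors for a resize from src to dst."""
    return dst[0] / src[0], dst[1] / src[1]


def keeps_aspect_ratio(src, dst, tol=1e-6):
    """True when both axes are scaled by (almost) the same factor."""
    first, second = scale_factors(src, dst)
    return abs(first - second) <= tol


source = (768, 1024)  # image size reported in both logs
for name, target in [("fcrn-dp-nyu-depth-v2-tf", (228, 304)), ("midasnet", (384, 384))]:
    print(name, scale_factors(source, target), keeps_aspect_ratio(source, target))
```

The fcrn-dp-nyu-depth-v2-tf input is a uniform downscale (both factors are 0.296875), while the midasnet input is scaled unevenly along the two axes (0.5 and 0.375), so the image is distorted before inference.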

Trying various images

I run monodepth_demo.py with both the fcrn-dp-nyu-depth-v2-tf and midasnet models on a variety of images.
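Because each run writes its results to the fixed names disp.pfm and disp.png (as the logs show), a batch over several images has to move the outputs aside after every run. Here is a minimal sketch, assuming the script is launched from the directory that contains monodepth_demo.py. The `results` directory, the naming scheme, and the model paths you pass in are hypothetical choices of mine.

```python
import shutil
import subprocess
import sys
from pathlib import Path


def build_command(model_xml, image):
    """Command line for one demo run, using only the -m and -i options from the help."""
    return [sys.executable, "monodepth_demo.py", "-m", str(model_xml), "-i", str(image)]


def output_name(model_xml, image, suffix):
    """Name a saved result after the image and the model file."""
    return "%s_%s%s" % (Path(image).stem, Path(model_xml).stem, suffix)


def run_all(model_xmls, images, out_dir="results"):
    """Run every model on every image and keep each disp.pfm / disp.png."""
    out = Path(out_dir)
    out.mkdir(exist_ok=True)
    for image in images:
        for model_xml in model_xmls:
            subprocess.run(build_command(model_xml, image), check=True)
            for produced in ("disp.pfm", "disp.png"):
                target = out / output_name(model_xml, image, Path(produced).suffix)
                shutil.move(produced, str(target))
```

For instance, `run_all([fcrn_xml, midasnet_xml], ["IMG_0958.jpg"])` would leave files such as `results/IMG_0958_midasnet.png`, where `fcrn_xml` and `midasnet_xml` stand for the .xml paths on your machine.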
