OpenVINO 2023.1 has been released.

https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/whats-new.html

Stable Diffusion is now supported as a preview feature.

What’s new in 2023.1?

PyTorch solutions further enhanced and no need to convert to ONNX for deployment.

- PyTorch Model Support – Developers can now use their API of choice – PyTorch or OpenVINO for added performance benefits. Now, users can automatically import and convert their PyTorch models using the convert_model() API for use with native OpenVINO toolkit APIs.

- torch.compile (preview) – Developers can now use OpenVINO as a backend through PyTorch torch.compile, empowering developers to utilize OpenVINO toolkit through PyTorch APIs. This feature has also been integrated into the Automatic1111 Stable Diffusion Web UI, helping developers achieve accelerated performance for Stable Diffusion 1.5 and 2.1 on Intel CPUs and GPUs in both Native Linux and Windows OS platforms.

- Optimum Intel – More Gen AI coverage and frameworks integrations to minimize code changes: Hugging Face and Intel have continued to enhance top generative AI models by optimizing execution, making your models run faster and more efficiently on both CPU and GPU. OpenVINO toolkit serves as runtime for inferencing execution. We’ve also enabled new PyTorch auto import and conversion capabilities along with added support for weights compression to further performance gains.

Broader LLM model support and more model compression techniques

- Enhancing performance and accessibility for Generative AI: We have made significant strides in enhancing runtime performance and optimizing memory usage, particularly for LLMs. We’ve enabled models used for chatbots, instruction following, code generation, and many more, including prominent models like BLOOM, Dolly, Llama 2, GPT-J, GPTNeoX, ChatGLM, Automatic1111, Open-LLAMA and LoRA.

- Improved LLMs on GPU – Expanded model coverage with dynamic shapes support, further helping the performance of generative AI workloads on both integrated and discrete GPUs. Memory reuse and weight memory consumption for dynamic shapes have also been improved.

- Neural Network Compression Framework (NNCF) – Now includes an 8-bit weight compression method, making it easier to compress and optimize LLMs. SmoothQuant has also been added for more accurate and efficient post-training quantization of Large Language Models. NNCF is now the recommended compression tool (as opposed to our Post-training Optimization Tool, POT).
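To make the idea of 8-bit weight compression more concrete, here is a small NumPy sketch of per-output-channel symmetric quantization. It illustrates the general technique only and is not NNCF's implementation. The function names are hypothetical, and NNCF exposes its own API for this, which the sketch does not call.

```python
import numpy as np


def compress_weights_int8(weights: np.ndarray):
    """Quantize a 2-D float weight matrix to int8 with one scale per row."""
    # One scale per output channel (row); all-zero rows get a scale of 1.
    max_abs = np.max(np.abs(weights), axis=1, keepdims=True)
    scale = np.where(max_abs > 0, max_abs / 127.0, 1.0).astype(np.float32)
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def decompress_weights(q: np.ndarray, scale: np.ndarray) -> np.ndarray:
    """Recover an approximate float32 weight matrix from int8 values."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal((4, 8)).astype(np.float32)

q, scale = compress_weights_int8(w)
w_hat = decompress_weights(q, scale)

print("float32 bytes:", w.nbytes, "int8 bytes:", q.nbytes)
print("max abs reconstruction error:", float(np.max(np.abs(w - w_hat))))
```

Only the weights are stored in 8 bits and activations stay in floating point, which is why this kind of compression mainly reduces model size and memory traffic. The reconstruction error per element is bounded by half of that row's scale.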

More portability and performance to run AI at the edge, in the cloud or locally

- Full support for Intel® Core™ Ultra processor (codename Meteor Lake) – This new generation of Intel CPUs is tailored to excel in AI workloads with a built-in inference accelerator, Intel AI Boost (Neural Processing Unit, supported through the NPU plug-in), with the option of also running AI workloads on the iGPU and CPU. Opt for the new NPU plug-in feature as an add-on install with your chosen OpenVINO 2023.1 package.

- Integration with MediaPipe – Get direct access to this framework and easily integrate it with OpenVINO™ Runtime and OpenVINO™ Model Server to enhance performance for faster AI model execution. Benefit from seamless model management and version control, and from custom logic integration with additional calculators and graphs for tailored AI solutions, allowing developers to scale faster with the ability to delegate deployment to remote hosts.
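Since Meteor Lake offers an NPU, an iGPU, and a CPU as inference targets, one simple approach is to pick the first available device from a preference list. The sketch below assumes the NPU plug-in reports its device as `"NPU"` and that the add-on is installed. `pick_device` and `available_openvino_devices` are hypothetical helper names, not part of OpenVINO.

```python
from typing import List, Sequence


def pick_device(
    available: Sequence[str],
    preference: Sequence[str] = ("NPU", "GPU", "CPU"),
) -> str:
    """Return the first available device that matches the preference order."""
    for wanted in preference:
        for name in available:
            # Device names may carry an index suffix such as "GPU.0".
            if name == wanted or name.startswith(wanted + "."):
                return name
    raise RuntimeError(f"none of {list(preference)} found in {list(available)}")


def available_openvino_devices() -> List[str]:
    """Ask the OpenVINO runtime which devices it can see (requires openvino)."""
    import openvino as ov  # imported lazily so pick_device works without it

    return list(ov.Core().available_devices)


# Example with a hard-coded device list, as a machine without an NPU might report.
print(pick_device(["CPU", "GPU.0", "GPU.1"]))
```

On a real system, `pick_device(available_openvino_devices())` would return a name that can be passed as the device argument when compiling a model.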

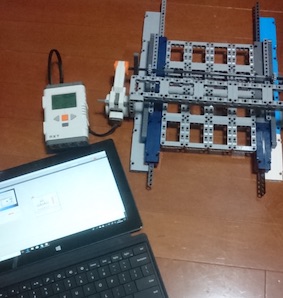

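After working in industrial image processing equipment development, game console development, and semiconductor engineering, I now work as a web engineer and marketer.

My favorite area is right around the boundary between hardware and software.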